Predict the Market is a great way to learn and understand how a neural network architecture is constructed and implemented with time series data. Accurately predicting the movement of a stock is an arduous task. Because market conditions change constantly, predicting the direction of a stock correctly more than 51% of the time should be deemed a success, but even then, one may not have a successful trading strategy yielding positive returns.

Hypothesis

A trained and hyperparameter-tuned neural network will accurately predict the movement of a stock and outperform the baseline of 51.7%.

Methodology

1. Import a quantitative dataset of a stock with daily opening and closing prices

Deciding how far back to backtest a stock requires some knowledge of market history. Backtesting a stock that has existed for thirty years, for example, will run into changes in how prices were quoted: the SEC ordered all U.S. stock markets to switch from a fractional system (denominated in sixteenths) to the current decimal system by April 9, 2001.

Using Beautiful Soup, a web scraper was constructed to collect and record the current S&P 500 company names. An ETL process was then created to pull historical trading data from yfinance for the collected names.
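A minimal sketch of the collection step, assuming the constituents are read from an HTML table whose first column holds the ticker symbol. The source page and its layout are not given in this write-up, so `parse_symbols` and `download_history` are hypothetical names. The download helper relies on `yfinance.download`; Yahoo Finance writes share classes with a dash (`BRK-B` rather than `BRK.B`), hence the character swap.

```python
from bs4 import BeautifulSoup


def parse_symbols(html: str) -> list[str]:
    """Return ticker symbols from the first column of the first HTML table."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table")
    if table is None:
        return []
    symbols = []
    for row in table.find_all("tr"):
        cell = row.find("td")  # header rows use <th>, so they are skipped
        if cell is not None:
            # Yahoo Finance expects BRK-B, not BRK.B
            symbols.append(cell.get_text(strip=True).replace(".", "-"))
    return symbols


def download_history(symbols, start, end):
    """Fetch daily price data per symbol; returns {symbol: DataFrame}."""
    import yfinance as yf  # imported lazily so parsing works without it

    return {s: yf.download(s, start=start, end=end, progress=False) for s in symbols}
```

Keeping the parsing separate from the download makes the scraper easy to check against a saved copy of the page before any network requests are made.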

2. Split the data into two segments for training and testing

The data was split conservatively: 80% to train the neural network and 20% to test it.
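A sketch of the 80/20 split, assuming the rows are ordered oldest first and that the split is chronological (no shuffling), so the test set only contains days the network has not seen. The write-up does not state how the split was ordered, and `chronological_split` is a hypothetical helper. Because it only uses `len` and slicing, it works on lists, NumPy arrays, and pandas DataFrames alike.

```python
def chronological_split(rows, train_fraction=0.8):
    """Split ordered rows into (train, test) without shuffling."""
    if not 0 < train_fraction < 1:
        raise ValueError("train_fraction must be between 0 and 1")
    cut = int(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


prices = list(range(10))  # stand-in for ten trading days, oldest first
train, test = chronological_split(prices)
print(len(train), len(test))  # 8 2
```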

3. Establish a Baseline

With our historical Google trading dataset, the baseline of 51.7% was established by calculating the percentage of days on which the stock closed higher than its opening price.
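The baseline calculation can be sketched as follows. The function name `up_day_baseline` and the sample prices are illustrative and are not taken from the project data.

```python
def up_day_baseline(opens, closes):
    """Fraction of days on which the close is above the open."""
    if len(opens) != len(closes) or len(opens) == 0:
        raise ValueError("opens and closes must be non-empty and equal length")
    up_days = sum(1 for o, c in zip(opens, closes) if c > o)
    return up_days / len(opens)


opens = [100.0, 101.0, 102.0, 103.0]   # illustrative numbers, not project data
closes = [101.0, 100.5, 103.0, 104.0]
print(up_day_baseline(opens, closes))  # 0.75
```

When up days are the majority, this figure is the accuracy of a model that always predicts "up", which is what makes it a useful floor for the network to beat.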

4. Scale and Standardize the Features before Fitting the Neural Network

StandardScaler from scikit-learn was applied to the input features. This ensures that no bias is introduced during training by the different scales of the input features. Without this step, the neural network may give a higher weight to features that have a higher average value than others.
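A short sketch of the scaling step with `StandardScaler`. The feature values are made up, and fitting the scaler on the training rows only (then reusing those statistics on the test rows) is an assumption about how the step was applied; it avoids leaking test-set information into training.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Illustrative features on very different scales (e.g. a price and a volume).
X_train = np.array([[10.0, 1_000_000.0], [12.0, 3_000_000.0], [14.0, 2_000_000.0]])
X_test = np.array([[13.0, 2_500_000.0]])

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # learn mean/std from training data only
X_test_scaled = scaler.transform(X_test)        # reuse the training statistics

print(X_train_scaled.mean(axis=0))  # approximately [0, 0]
print(X_train_scaled.std(axis=0))   # approximately [1, 1]
```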

5. Build the Neural Network Architecture using TensorFlow

Units: This defines the number of nodes or neurons in that particular layer. We have set this value to 128, meaning there will be 128 neurons in our hidden layer.

Kernel_initializer: This defines the starting values for the weights of the different neurons in the hidden layer. We have defined this to be ‘uniform’, which means that the weights will be initialized with values from a uniform distribution.

Activation: This is the activation function for the neurons in the particular hidden layer. Here we define the function as the Rectified Linear Unit function, or ‘relu’.

Inputs: This defines the number of inputs to the hidden layer. We have set this value equal to the number of columns of our input feature dataframe. This argument is not required in subsequent layers, as the model will know how many outputs the previous layer produced.
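The four settings above could be put together roughly as below. This is a sketch under assumptions rather than the project's exact model: the single sigmoid output unit, the `adam` optimizer, and the binary cross-entropy loss are not specified in this write-up, and `build_model` is a hypothetical name. The uniform initializer is written out as `RandomUniform()`, and the input size is declared with `keras.Input`.

```python
from tensorflow import keras


def build_model(n_features: int) -> keras.Model:
    """One 128-unit ReLU hidden layer followed by a sigmoid output."""
    model = keras.Sequential(
        [
            keras.Input(shape=(n_features,)),  # one input per feature column
            keras.layers.Dense(
                units=128,
                kernel_initializer=keras.initializers.RandomUniform(),  # 'uniform'
                activation="relu",
            ),
            # Assumed output layer: probability that the stock closes higher.
            keras.layers.Dense(units=1, activation="sigmoid"),
        ]
    )
    # Assumed training setup for a binary up/down target.
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model


model = build_model(n_features=5)
model.summary()
```

Only the first layer needs to be told the input size; the output layer infers its 128 inputs from the hidden layer before it.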

6. Train the Neural Network and Tune Hyperparameters with Keras

- Grid Search Parameters

- Tuning Optimizer

- Tuning Epochs
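The tuning loop can be sketched independently of any library. Here `grid_search` is a hypothetical helper that tries every combination of optimizer and epoch count, and `toy_score` is a stand-in scorer so the example runs without training a network. In practice the scorer would build the model, fit it for the given number of epochs, and return a validation accuracy. The grid values are illustrative; the write-up does not list the values that were searched.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Try every parameter combination and return (best_params, best_score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(**params)  # higher is better, e.g. validation accuracy
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(optimizer, epochs):
    """Stand-in scorer so the sketch runs without training a network."""
    return {"adam": 0.2, "sgd": 0.1}[optimizer] - abs(epochs - 100) / 1000


grid = {"optimizer": ["adam", "sgd"], "epochs": [50, 100, 200]}
best_params, best_score = grid_search(grid, toy_score)
print(best_params)  # {'optimizer': 'adam', 'epochs': 100}
```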

7. Predicting the Movement of a Stock

Now that the neural network has been compiled and trained, the predict method was used to predict the movement of a stock on the test data.
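A sketch of how the raw predictions might be turned into up/down calls and scored. It assumes the network outputs one probability per day (as a sigmoid output would) and that 0.5 is used as the cutoff; neither detail is stated in this write-up. The probabilities and labels below are made up, and `to_direction` and `accuracy` are hypothetical helpers.

```python
import numpy as np


def to_direction(probabilities, threshold=0.5):
    """Map predicted probabilities to 1 (up) or 0 (not up)."""
    return (np.asarray(probabilities).ravel() > threshold).astype(int)


def accuracy(y_true, y_pred):
    """Share of days on which the predicted direction matched the actual one."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))


probs = np.array([[0.61], [0.48], [0.52], [0.30]])  # shaped like a predict() result
y_test = np.array([1, 0, 0, 0])                     # illustrative labels
y_pred = to_direction(probs)
print(y_pred, accuracy(y_test, y_pred))  # [1 0 1 0] 0.75
```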

Results

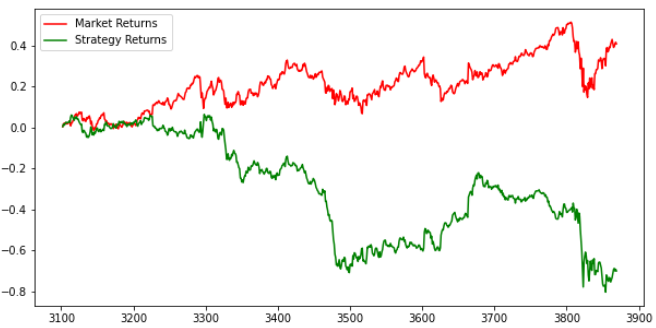

Even though the neural network recorded a 52% accuracy score on the training data and outperformed the baseline of 51.7%, a Matplotlib visualization showed that trading on the network's predictions returned a 70% loss when compared to a buy-and-hold strategy.
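The comparison against buy and hold can be sketched as below. The trading rule is an assumption, since this write-up does not define it: the strategy is long on days predicted up and short otherwise. The returns and predictions are illustrative, and both function names are hypothetical.

```python
import numpy as np


def cumulative_returns(daily_returns):
    """Compound daily simple returns into a running total return."""
    return np.cumprod(1.0 + np.asarray(daily_returns, dtype=float)) - 1.0


def strategy_returns(daily_returns, predictions):
    """Assumed rule: long when the prediction is 1 (up), short when it is 0."""
    positions = np.where(np.asarray(predictions) == 1, 1.0, -1.0)
    return positions * np.asarray(daily_returns, dtype=float)


market = np.array([0.01, -0.02, 0.03])  # illustrative daily returns
preds = np.array([1, 1, 0])             # illustrative model output
buy_hold = cumulative_returns(market)
strategy = cumulative_returns(strategy_returns(market, preds))
print(round(buy_hold[-1], 4), round(strategy[-1], 4))
```

Both arrays can be plotted on the same Matplotlib axes. The toy numbers also show how a model that is right on two days out of three can still trail buy and hold when its one miss falls on the largest move.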

Conclusion

This is a great base to learn and understand how a neural network architecture is constructed and implemented with time series data.